Basic network technologies. Network technologies of local networks. How GCS technologies developed

Every day, to gain access to services available on the Internet, we access thousands of servers located in various geographical locations. Each of these servers is assigned a unique IP address by which it is identified on the connected local network.

Successful communication between nodes requires the effective interaction of a number of protocols. These protocols are implemented at the hardware and software level of each network device. The interaction between protocols can be represented as a protocol stack. The protocols in a stack are a multi-level hierarchy in which the top-level protocol depends on the protocol services at lower levels.

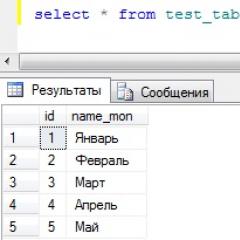

The figure above shows the protocol stack with the set of primary protocols required to run a web server over an Ethernet network. The lower layers of the stack are responsible for moving data across the network and providing services to the upper layers. The upper layers are largely responsible for the content of the messages being sent and for the user interface.

It would be impossible to remember the IP addresses of all the servers that provide services over the Internet. Instead, servers are found by name, and each name is mapped to an IP address. The Domain Name System (DNS) lets you use a hostname to query the IP address of an individual server. Names in this system are registered and organized in hierarchical groups called domains. Some of the most popular top-level domains on the Internet include .com, .edu, and .net. A DNS server holds a table that associates the host names in a domain with the corresponding IP addresses. If a client knows the name of a server, for example a web server, but needs its IP address, it sends a request to a DNS server on port 53. The client uses the DNS server address specified in the DNS settings of its own IP configuration. Upon receiving a request, the DNS server checks its table for a record that matches the requested name. If the DNS server has no record for the requested name, it queries another DNS server within its domain. Once the IP address has been found, the DNS server sends the result back to the client. If the name cannot be resolved, the client cannot contact the web server and receives an error or timeout message. For client software, determining an IP address through DNS is simple and transparent to the user.
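The lookup described above can be sketched in a few lines of Python. This is only an illustration, not a real DNS implementation: the `DnsServer` class, the host names and the addresses (taken from the range reserved for documentation) are all hypothetical, and forwarding is reduced to asking a single upstream server.

```python
from typing import Dict, Optional


class DnsServer:
    """Toy name server: a table of host names plus an optional upstream server."""

    def __init__(self, table: Dict[str, str],
                 upstream: Optional["DnsServer"] = None) -> None:
        self.table = table          # host name -> IP address
        self.upstream = upstream    # hypothetical server asked when a name is unknown

    def resolve(self, hostname: str) -> Optional[str]:
        # Host names are case-insensitive, so normalise before the lookup.
        name = hostname.lower().rstrip(".")
        if name in self.table:
            return self.table[name]
        if self.upstream is not None:
            return self.upstream.resolve(name)
        return None  # the name could not be resolved


# Hypothetical data, invented for this example.
other = DnsServer({"mail.example.com": "192.0.2.25"})
local = DnsServer({"www.example.com": "192.0.2.80"}, upstream=other)

print(local.resolve("www.example.com"))     # found in the local table
print(local.resolve("mail.example.com"))    # found by the upstream server
print(local.resolve("nosuch.example.com"))  # not found anywhere
```

In a real program the operating system's resolver does this work; in Python, `socket.getaddrinfo` from the standard library asks the DNS servers configured on the host.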

In the process of exchanging information, the web server and web client use special protocols and standards to ensure that information is received and read. These protocols include the following: application layer protocols, transport protocols, internetworking and network access protocols.

Application Layer Protocol

The Hypertext Transfer Protocol (HTTP) manages the interaction between a web server and a web client. The HTTP protocol specifies the format of requests and responses to requests sent between the client and server. To control the process of passing messages between the client and server, HTTP uses other protocols.

Transport protocol

Transmission Control Protocol (TCP) is a transport protocol that manages individual communication sessions between Web servers and Web clients. The TCP protocol divides hypertext messages (HTTP) into segments and sends them to the end host. It also performs data flow control and confirms the exchange of packets between nodes.

Internet Protocol

The most commonly used internetworking protocol is the Internet Protocol (IP). The IP protocol is responsible for receiving formatted segments from TCP, assigning logical (IP) addresses to them, and encapsulating them into packets for routing to the end host.

Network access protocols

In local networks, the Ethernet protocol is most often used. Network access protocols perform two main functions - managing data transmission channels and physically transmitting data over the network.

Data link control protocols accept packets from the IP protocol and encapsulate them in the appropriate LAN frame format. These protocols are responsible for assigning physical addresses to data frames and preparing them for transmission over the network.

Physical data transmission standards and protocols are responsible for representing the bits in the transmission path, selecting the method for transmitting signals, and converting them at the receiving node. Network interface cards support the corresponding data path protocols.
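The layering described in these sections can be pictured as each layer wrapping the data handed down by the layer above. The sketch below is a deliberate simplification: the textual header layouts are invented for illustration (real TCP, IP and Ethernet headers are binary and carry many more fields), and `tcp_segment`, `ip_packet` and `ethernet_frame` are hypothetical helper names, as are the addresses.

```python
def tcp_segment(payload: bytes, src_port: int, dst_port: int, seq: int) -> bytes:
    # Simplified transport header: ports and a sequence number.
    return f"TCP {src_port}>{dst_port} seq={seq}|".encode() + payload


def ip_packet(segment: bytes, src_ip: str, dst_ip: str) -> bytes:
    # Simplified network header: logical source and destination addresses.
    return f"IP {src_ip}>{dst_ip}|".encode() + segment


def ethernet_frame(packet: bytes, src_mac: str, dst_mac: str) -> bytes:
    # Simplified data link header: physical source and destination addresses.
    return f"ETH {src_mac}>{dst_mac}|".encode() + packet


http_request = b"GET / HTTP/1.1\r\nHost: www.example.com\r\n\r\n"
segment = tcp_segment(http_request, 49152, 80, seq=1)
packet = ip_packet(segment, "192.0.2.10", "192.0.2.80")
frame = ethernet_frame(packet, "02:00:00:00:00:01", "02:00:00:00:00:02")
print(frame)
```

Reading the printed frame from left to right shows the headers in the order the receiving node removes them: Ethernet first, then IP, then TCP, and finally the HTTP request itself.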

Each service accessible over the network has its own application-level protocols supported by the server and client software. In addition to application-layer protocols, all common Internet services use the Internet Protocol (IP), which is responsible for addressing and routing messages between source and destination nodes.

The IP protocol is only responsible for the structure, addressing and routing of packets. IP does not define how packets are delivered or transported. Transport protocols specify how messages are transmitted between nodes. The most popular transport protocols are Transmission Control Protocol (TCP) and User Datagram Protocol (UDP). These transport protocols run on top of IP and use it to carry data between nodes.

If an application requires confirmation of message delivery, it uses the TCP protocol. This is similar to the process of sending registered mail in the regular postal system, where the recipient signs the receipt to confirm receipt of the letter.

TCP breaks the message into smaller pieces called segments. These segments are numbered sequentially and passed to the IP protocol, which then assembles the packets. TCP keeps track of the segments sent to a particular host by a particular application. If the sender does not receive an acknowledgment within a certain period of time, TCP treats the unacknowledged segments as lost and resends them. Only the lost portion of the message is resent, not the entire message.

The TCP protocol at the receiving node is responsible for reassembling the message segments and forwarding them to the appropriate application.
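The segmentation, selective resending and reassembly described above can be imitated with a small simulation. It is a sketch under simplifying assumptions, not how a real TCP stack works: there are no timers or windows, loss is modelled by a set of sequence numbers dropped on the first attempt, and the function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Segment = Tuple[int, bytes]  # (sequence number, data)


def split_into_segments(message: bytes, size: int) -> List[Segment]:
    """Cut the message into numbered pieces of at most `size` bytes."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]


def deliver(segments: List[Segment], lost_once: Set[int]) -> Tuple[bytes, int]:
    """Send segments, resending only those that were not acknowledged.

    `lost_once` holds the sequence numbers dropped on the first attempt.
    Returns the reassembled message and the total number of transmissions.
    """
    received: Dict[int, bytes] = {}
    pending = list(segments)
    dropped = set(lost_once)
    transmissions = 0
    while pending:
        unacknowledged = []
        for seq, data in pending:
            transmissions += 1
            if seq in dropped:
                dropped.remove(seq)              # lost now, arrives on the retry
                unacknowledged.append((seq, data))
            else:
                received[seq] = data             # receiver stores and acknowledges
        pending = unacknowledged                 # only these are sent again
    # The receiver reassembles the message in sequence-number order.
    return b"".join(received[seq] for seq in sorted(received)), transmissions


message = b"Hypertext sent over a reliable transport"
segments = split_into_segments(message, size=8)
rebuilt, sent = deliver(segments, lost_once={1, 3})
print(len(segments), sent, rebuilt == message)
```

With two segments lost once each, the sender makes exactly two extra transmissions rather than repeating the whole message.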

FTP and HTTP are examples of applications that use TCP to deliver data.

In some cases, a delivery confirmation protocol such as TCP is not required, because acknowledgments slow down data transfer. In such cases, UDP is the more appropriate transport protocol.

The UDP protocol performs non-guaranteed delivery of data and does not require confirmation from the recipient. This is similar to sending a letter by regular mail without a delivery receipt. Delivery of the letter is not guaranteed, but the chances of its delivery are quite high.

UDP is the preferred protocol for streaming audio, video, and voice over Internet Protocol (VoIP). Confirming delivery will only slow down the data transfer process, and redelivery is not advisable.

An example of the use of the UDP protocol is Internet radio. If any message gets lost along the network delivery path, it will not be resent. The loss of several packets will be perceived by the listener as a short-term loss of sound. If you use the TCP protocol for this, which provides for the re-delivery of lost packets, then the data transfer process will be suspended to receive the lost packets, which will significantly degrade the quality of playback.
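A minimal sketch of this "send and forget" behaviour, using Python's standard `socket` module on the loopback interface. The payload and the two-second timeout are arbitrary choices for the example; a real streaming application would send a continuous sequence of such datagrams.

```python
import socket

# Receiver: a UDP socket on the loopback interface; port 0 lets the OS pick a free port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)  # do not wait forever if the datagram never arrives
address = receiver.getsockname()

# Sender: no connection setup and no acknowledgment is requested or awaited.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sender.sendto(b"audio chunk 1", address)

try:
    data, _ = receiver.recvfrom(2048)
except socket.timeout:
    data = None  # a player would skip the missing chunk instead of asking for it again
finally:
    sender.close()
    receiver.close()

print(data)
```

Nothing in this exchange tells the sender whether the datagram arrived; any recovery would have to be added by the application itself.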

Simple Mail Transfer Protocol (SMTP)

The SMTP protocol is used by the mail client program to send messages to the local mail server. Next, the local server determines whether the message is addressed to a local mailbox or a mailbox on another server.

SMTP is also used for communication between mail servers, for example when a message has to be relayed to a mailbox on another server. SMTP requests are sent to port 25.

Post Office Protocol (POP3)

A POP server receives and stores messages for its users. Once a connection is established between the client and the mail server, messages will be downloaded to the client's computer. By default, messages are not saved on the server after they are read by the client. Clients access POP3 servers on port 110.

IMAP4 protocol

The IMAP server also receives and stores messages addressed to its users. However, messages may remain in users' mailboxes unless they are explicitly deleted by the users themselves. The most recent version of the IMAP protocol, IMAP4, listens for requests from clients on port 143.

Different network operating system platforms use different mail servers.

Instant messaging (IM) is one of the most popular information exchange tools today. IM software running on local computers lets users interact in real time through messaging windows or chat sessions over the Internet. Many instant messaging programs from various developers are on the market. Each instant messaging service may use its own protocols and destination ports, so compatible software must be installed on both communicating hosts.

A minimal configuration is sufficient to run instant messaging applications. After downloading the client application, simply enter your username and password. This operation is required to authenticate the IM client at the entrance to the instant messaging network. After logging into the server, clients can send messages to other clients in real time. In addition to text messages, the IM client supports the transfer of video, music and voice files. IM clients support the phone feature, which allows users to make phone calls over the Internet. There are additional options for customizing the “Contact List”, as well as personal design styles.

IM client software can be downloaded and used on all types of devices, including: computers, PDAs and cell phones.

Today, telephone calls over the Internet are becoming increasingly popular. Internet telephony client applications implement peer-to-peer technology, which is similar to instant messaging technology. IP telephony uses Voice over IP (VoIP) technology, which uses IP packets to transmit digitized voice data.

To get started with Internet telephony, download client software from one of the companies that offer this service. Rates for using Internet telephony services vary depending on the region and provider.

After installing the software, the user must select a unique name. This is necessary to receive calls from other users. Speakers and a microphone, built-in or external, are also required. A headset connected to a computer is often used as a telephone.

Calls are established with other users using the same service by selecting names from a list. Establishing a call to a regular telephone (land line or cellular phone) requires a gateway to access the public switched telephone network (PSTN).

The protocols and destination ports used by Internet telephony applications may vary depending on the software.

Network technology is an agreed set of standard protocols and the software and hardware that implement them (for example, network adapters, drivers, cables and connectors), sufficient to build a computer network. The word “sufficient” emphasizes that this is the minimum set of tools with which a working network can be built. The network can perhaps be improved, for example by dividing it into subnets, which immediately requires, in addition to the standard Ethernet protocols, the IP protocol and special communication devices, routers. The improved network will most likely be more reliable and faster, but only through add-ons to the Ethernet technology that forms the basis of the network.

The term “network technology” is most often used in the narrow sense described above, but sometimes it is interpreted more broadly as any set of tools and rules for building a network, for example, “end-to-end routing technology,” “secure channel technology,” or “IP network technology.”

The protocols on which a network of a given technology (in the narrow sense) is built were developed specifically to work together, so the network developer needs no additional effort to make them interact. Network technologies are sometimes called basic technologies, meaning that they form the foundation on which any network is built. Examples of basic network technologies include, in addition to Ethernet, such well-known local network technologies as Token Ring and FDDI, or the X.25 and frame relay technologies for territorial (wide area) networks. To obtain a working network in this case, it is enough to purchase software and hardware belonging to the same basic technology (network adapters with drivers, hubs, switches, cabling, and so on) and connect them in accordance with the requirements of the standard for that technology.

Creation of standard local network technologies

In the mid-80s, the situation in local networks began to change dramatically. Standard technologies for connecting computers into a network became established: Ethernet, Arcnet, Token Ring. Personal computers served as a powerful stimulus for their development. These mass-produced products were ideal elements for building networks: on the one hand, they were powerful enough to run networking software, and on the other, they clearly needed to pool their computing power to solve complex problems and to share expensive peripherals and disk arrays. Therefore, personal computers began to predominate in local networks, not only as client computers but also as data storage and processing centers, that is, network servers, displacing minicomputers and mainframes from these familiar roles.

Standard network technologies have turned the process of building a local network from an art into a routine task. To create a network, it was enough to purchase network adapters of the appropriate standard, for example Ethernet, a standard cable, connect the adapters to the cable with standard connectors and install one of the popular network operating systems on the computer, for example, NetWare. After this, the network began to work and connecting each new computer did not cause any problems - naturally, if a network adapter of the same technology was installed on it.

Local networks, in comparison with global networks, have introduced a lot of new things into the way users organize their work. Access to shared resources became much more convenient - the user could simply view lists of available resources, rather than remember their identifiers or names. After connecting to a remote resource, it was possible to work with it using commands already familiar to the user from working with local resources. The consequence and at the same time the driving force of this progress was the emergence of a huge number of non-professional users who did not need to learn special (and quite complex) commands for network work. And local network developers got the opportunity to implement all these conveniences as a result of the emergence of high-quality cable communication lines, on which even first-generation network adapters provided data transfer rates of up to 10 Mbit/s.

Of course, the developers of global networks could not even dream of such speeds - they had to use the communication channels that were available, since laying new cable systems for computer networks thousands of kilometers long would require colossal capital investments. And “at hand” there were only telephone communication channels, poorly suited for high-speed transmission of discrete data - a speed of 1200 bps was a good achievement for them. Therefore, economical use of communication channel bandwidth has often been the main criterion for the effectiveness of data transmission methods in global networks. Under these conditions, various procedures for transparent access to remote resources, standard for local networks, for global networks have long remained an unaffordable luxury.

Modern trends

Today, computer networks continue to develop, and quite quickly. The gap between local and global networks is constantly narrowing, largely due to the emergence of high-speed territorial communication channels that are not inferior in quality to local network cable systems. In global networks, resource access services are appearing that are as convenient and transparent as local network services. The most popular global network, the Internet, offers many such examples.

Local networks are also changing. Instead of a passive cable connecting computers, a variety of communication equipment appeared in them in large quantities - switches, routers, gateways. Thanks to this equipment, it became possible to build large corporate networks, numbering thousands of computers and having a complex structure. There has been a resurgence of interest in large computers, largely because, after the euphoria over the ease of working with personal computers subsided, it became clear that systems consisting of hundreds of servers were more difficult to maintain than several large computers. Therefore, in a new round of the evolutionary spiral, mainframes began to return to corporate computing systems, but as full-fledged network nodes supporting Ethernet or Token Ring, as well as the TCP/IP protocol stack, which, thanks to the Internet, became a de facto network standard.

Another very important trend has emerged, affecting local and global networks alike. They began to process information previously unusual for computer networks: voice, video images, drawings. This required changes to protocols, network operating systems and communications equipment. Multimedia information is difficult to transmit over a network because it is sensitive to delays in the delivery of data packets; delays usually distort such information at the end nodes of the network. Since traditional networking services such as file transfer or e-mail generate latency-insensitive traffic, and all network elements were designed with that kind of traffic in mind, the advent of real-time traffic has created major problems.

Today, these problems are solved in various ways, including with the help of ATM technology, which was specially designed for the transmission of various types of traffic. However, despite significant efforts in this direction, an acceptable solution is still far away, and much remains to be done to achieve the cherished goal: merging the technologies not only of local and global networks but of all information networks, including computer, telephone and television networks. Although this idea seems utopian to many today, serious experts believe that the prerequisites for such a synthesis already exist; their opinions differ only on the approximate time frame, with estimates ranging from 10 to 25 years. Moreover, it is believed that the basis for unification will be the packet switching technology used today in computer networks, and not the circuit switching technology used in telephony, which should probably increase interest in networks of this type.

Network technologies of local networks

In local networks, as a rule, a shared data transmission medium (mono-channel) is used and the main role is played by protocols of the physical and data link layers, since these levels best reflect the specifics of local networks.

Network technology is an agreed set of standard protocols and software and hardware that implement them, sufficient to build a computer network. Network technologies are called core technologies or network architectures.

Network architecture determines the topology and the method of access to the data transmission medium, the cable system or transmission medium itself, the format of network frames, the type of signal encoding, and the transmission speed. In modern computer networks, technologies (network architectures) such as Ethernet, Token-Ring, ArcNet and FDDI have become widespread.

Network technologies IEEE802.3/Ethernet

Currently, this architecture is the most popular in the world. Popularity is ensured by simple, reliable and inexpensive technologies. A classic Ethernet network uses two types of standard coaxial cable (thick and thin).

However, the version of Ethernet that uses twisted pairs as a transmission medium has become increasingly widespread, since their installation and maintenance are much simpler. Ethernet networks use bus and passive star topologies, and the access method is CSMA/CD.

The IEEE802.3 standard, depending on the type of data transmission medium, has modifications:

10BASE5 (thick coaxial cable) - provides a data transfer rate of 10 Mbit/s and a segment length of up to 500 m;

10BASE2 (thin coaxial cable) - provides a data transfer rate of 10 Mbit/s and a segment length of up to 185 m (nominally 200 m);

10BASE-T (unshielded twisted pair) - allows you to create a network using a star topology. The distance from the hub to the end node is up to 100m. The total number of nodes should not exceed 1024;

10BASE-F (fiber optic cable) - allows you to create a network using a star topology. The distance from the hub to the end node is up to 2000m.

In development of Ethernet technology, high-speed options have been created: IEEE802.3u/Fast Ethernet and IEEE802.3z/Gigabit Ethernet. The main topology used in Fast Ethernet and Gigabit Ethernet networks is passive star.

Fast Ethernet network technology provides a transmission speed of 100 Mbit/s and has three modifications:

100BASE-T4 - uses four unshielded twisted pairs. The distance from the hub to the end node is up to 100 m;

100BASE-TX - uses two twisted pairs (unshielded or shielded). The distance from the hub to the end node is up to 100 m;

100BASE-FX - uses fiber optic cable (two fibers in a cable). The distance from the hub to the end node is up to 2000 m.

Gigabit Ethernet – provides a transfer speed of 1000 Mbit/s. The following modifications of the standard exist:

1000BASE-SX - uses fiber optic cable with a light signal wavelength of 850 nm.

1000BASE-LX - uses fiber optic cable with a light signal wavelength of 1300 nm.

1000BASE-CX – uses shielded twisted pair cable.

1000BASE-T – uses four unshielded twisted pairs.

Fast Ethernet and Gigabit Ethernet networks are compatible with networks based on the Ethernet standard, so it is easy and simple to connect Ethernet, Fast Ethernet and Gigabit Ethernet segments into a single computer network.

The only drawback of this network is the lack of a guarantee of access time to the medium (and mechanisms providing priority service), which makes the network unpromising for solving real-time technological problems. Certain problems are sometimes created by the limitation on the maximum data field, equal to ~1500 bytes.

Different encoding schemes are used for different Ethernet speeds, but the access algorithm and frame format remain unchanged, which guarantees software compatibility.

The Ethernet frame has the format shown in Fig.

Ethernet Frame Format (the numbers at the top of the figure indicate the field size in bytes)

The preamble field contains 7 bytes of 0xAA and serves to stabilize and synchronize the medium (alternating CD1 and CD0 signals, ending with CD0). It is followed by the SFD field (start frame delimiter = 0xAB), which marks the start of the frame. The EFD field (end frame delimiter) marks the end of the frame. The checksum field (CRC, cyclic redundancy check), like the preamble, SFD and EFD, is generated and checked at the hardware level. Some modifications of the protocol do not use the EFD field. The fields available to the user run from the destination address through the data field, inclusive. The CRC is followed by an interpacket gap (IPG) of at least 9.6 μs. The maximum frame size is 1518 bytes (the preamble, SFD and EFD fields are not included). The interface examines every packet traveling along the cable segment to which it is connected, because whether a packet is correct and to whom it is addressed can be determined only by receiving it in full. The packet's CRC, its length, and whether it contains a whole number of bytes are checked after the destination address has been checked.

When the computer is connected to the network directly using a switch, the restriction on the minimum frame length is theoretically removed. But working with shorter frames in this case will become possible only by replacing the network interface with a non-standard one (both for the sender and the recipient)!

If the value in the frame's protocol/type field is 1500 or less, the field gives the length of the frame's data field. Otherwise, it holds the code of the protocol whose packet is encapsulated in the Ethernet frame.
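The dual role of this field can be shown with a short parser. It is a sketch that assumes the frame starts at the destination address (the preamble and SFD are handled by hardware and never reach software); the function name `parse_ethernet_header` and the sample frame are hypothetical. The boundaries follow the usual IEEE 802.3 convention: values up to 1500 are lengths, values from 0x0600 (1536) upward are protocol codes.

```python
import struct
from typing import Tuple


def mac_to_text(raw: bytes) -> str:
    """Format six raw bytes as a colon-separated MAC address."""
    return ":".join(f"{byte:02x}" for byte in raw)


def parse_ethernet_header(frame: bytes) -> Tuple[str, str, str, int]:
    """Return (destination MAC, source MAC, kind, value) for a raw frame."""
    if len(frame) < 14:
        raise ValueError("an Ethernet header is 14 bytes long")
    # Network byte order: 6-byte destination, 6-byte source, 2-byte type/length.
    dst, src, type_or_len = struct.unpack("!6s6sH", frame[:14])
    if type_or_len <= 1500:
        kind = "length"      # number of bytes in the data field
    elif type_or_len >= 0x0600:
        kind = "ethertype"   # code of the encapsulated protocol
    else:
        kind = "undefined"   # 1501..1535 has no assigned meaning
    return mac_to_text(dst), mac_to_text(src), kind, type_or_len


# Hypothetical broadcast frame carrying an IPv4 packet (code 0x0800), zero-filled data.
frame = bytes.fromhex("ffffffffffff" "020000000001" "0800") + bytes(46)
print(parse_ethernet_header(frame))
```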

Access to the Ethernet channel is based on the CSMA/CD algorithm (carrier sense multiple access with collision detection). In Ethernet, any station connected to the network can attempt to start transmitting a packet (frame) if the cable segment to which it is connected is free. The interface decides that a segment is free when no carrier has been present for 9.6 μs. Since the first bit of a packet does not reach all other stations at the same moment, two or more stations may start transmitting at nearly the same time, especially since delays in repeaters and cables can be considerable. Such overlapping attempts are called collisions. A collision is recognized by a signal level in the channel that corresponds to two or more transceivers operating simultaneously. When a collision is detected, the station interrupts transmission. The attempt can be resumed after a delay that is a multiple of 51.2 μs and does not exceed roughly 52 ms. The delay is a pseudo-random value calculated independently by each station: t = RAND(0, 2^min(n,10)) intervals of 51.2 μs, where RAND returns an integer r with 0 <= r < 2^min(n,10), n is the value of the attempt counter, and 10 is the backoff limit.

Typically, after a collision, time is divided into discrete slots (time domains) whose length equals twice the packet's propagation time across the segment (RTT). For the maximum possible RTT, this is 512 bit times. After the first collision, each station waits 0 or 1 slots before trying again. After the second collision, each station waits 0, 1, 2 or 3 slots, and so on. After the nth collision, the random number lies in the range 0 to 2^n - 1. After 10 collisions, the maximum random delay stops increasing and remains at 1023 slots.
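The choice of the waiting time can be written down directly. The sketch below assumes 10 Mbit/s Ethernet, where one slot of 512 bit times lasts 51.2 μs; the constant and function names are hypothetical, and the limit of 16 attempts is the default retry limit used by Ethernet.

```python
import random

SLOT_TIME_US = 51.2   # one slot: 512 bit times at 10 Mbit/s
BACKOFF_LIMIT = 10    # the range stops growing after 10 collisions
ATTEMPT_LIMIT = 16    # the frame is abandoned after 16 failed attempts


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Pick the number of slots to wait after the n-th collision in a row."""
    if not 1 <= collisions < ATTEMPT_LIMIT:
        raise ValueError("transmission is abandoned after 16 attempts")
    exponent = min(collisions, BACKOFF_LIMIT)
    return rng.randrange(2 ** exponent)  # uniform integer in 0 .. 2**exponent - 1


rng = random.Random(1)  # fixed seed only to make the demonstration repeatable
for n in (1, 2, 3, 10, 15):
    slots = backoff_slots(n, rng)
    print(f"collision {n:2d}: wait {slots} slots = {slots * SLOT_TIME_US:.1f} us")
```

The largest possible wait is 1023 slots, that is 1023 × 51.2 μs, a little over 52 ms.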

Thus, the longer the cable segment, the longer the average access time.

After waiting, the station increases the attempt counter by one and begins the next transmission. The default retry limit is 16; once it is reached, the transmission attempt is abandoned and an error is reported. Transmission of a long frame tends to “synchronize” the start of transmission by several stations: while it lasts, two or more stations may well acquire data to send, and the moment they detect the end of the packet they all start their IPG timers. Fortunately, news of the end of the packet does not reach all stations of the segment at the same time. But the same delays mean that a station does not learn immediately that another station has started to transmit a new packet. Stations involved in a collision notify the other stations by sending a jam signal of at least 32 bits, whose contents are not regulated. This arrangement makes a repeat collision less likely. Apart from overload, a large number of collisions can be caused by an excessive total length of the logical cable segment, too many repeaters, a cable break, a missing terminator (50-ohm cable termination) or a faulty interface. But collisions in themselves are not something negative: they are the mechanism that regulates access to the network medium.

In Ethernet, with synchronization, the following algorithms are possible:

A.

- If the channel is free, the terminal transmits a packet with probability 1.

- If the channel is busy, the terminal waits for it to become free and then transmits.

B.

- If the channel is free, the terminal transmits the packet.

- If the channel is busy, the terminal determines the time of the next transmission attempt. The time of this delay can be specified by some statistical distribution.

C.

- If the channel is free, the terminal transmits the packet with probability p, and with probability 1-p it postpones the transmission for t seconds (for example, to the next time domain).

- When the attempt is repeated with a free channel, the algorithm does not change.

- If the channel is busy, the terminal waits until the channel is free, after which it acts again according to the algorithm in point 1.

Algorithm A seems attractive at first glance, but it contains the possibility of collisions with a probability of 100%. Algorithms B and C are more robust against this problem.
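The difference can be checked with a small Monte Carlo experiment. It models only the moment at which a busy channel becomes free while several stations are waiting: each waiting station transmits with probability p, so p = 1 corresponds to algorithm A and p < 1 to algorithm C. The function name and the parameter values are hypothetical, and the model ignores propagation delays.

```python
import random


def collision_rate(stations: int, p: float, trials: int, rng: random.Random) -> float:
    """Share of trials in which two or more waiting stations transmit at once."""
    collisions = 0
    for _ in range(trials):
        # Each waiting station independently decides whether to transmit now.
        senders = sum(rng.random() < p for _ in range(stations))
        if senders >= 2:
            collisions += 1
    return collisions / trials


rng = random.Random(42)  # fixed seed only to make the demonstration repeatable
print("algorithm A (p = 1.0):", collision_rate(2, 1.0, 10_000, rng))
print("algorithm C (p = 0.5):", collision_rate(2, 0.5, 10_000, rng))
```

With two waiting stations, algorithm A collides every time, while with p = 0.5 both stations transmit together in only about a quarter of the cases.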

The effectiveness of the CSMA/CD algorithm depends on how quickly the transmitting side learns of a collision and interrupts the transmission, because continuing is pointless: the data is already damaged. This time depends on the length of the network segment and the delays in the segment equipment. Twice the delay value determines the minimum length of a packet transmitted in such a network. If the packet is shorter, it can be transmitted without the sender learning that it was damaged by a collision. For modern Ethernet local networks, built on switches and full-duplex connections, this problem is irrelevant.

To clarify this statement, consider the case when one of the stations (1) transmits a packet to the most remote computer (2) in a given network segment. Let the signal propagation time to this machine be equal to T. Let us also assume that machine (2) tries to start transmitting just at the moment the packet arrives from station (1). In this case, station (1) learns about the collision only 2T after the start of transmission (the signal propagation time from (1) to (2) plus the collision signal propagation time from (2) to (1)). It should be taken into account that collision registration is an analog process and the transmitting station must “listen” to the signal in the cable during the transmission process, comparing the reading result with what it is transmitting. It is important that the signal encoding scheme allows collision detection. For example, the sum of two signals with level 0 will not allow this to be done. You might think that transmitting a short packet with corruption due to a collision is not such a big deal; delivery control and retransmission can solve the problem.

It should only be taken into account that retransmission in the event of a collision registered by the interface is carried out by the interface itself, and retransmission in the case of response delivery control is performed by the application process, requiring the resources of the workstation's central processor.

Double turnaround time and collision detection

Clear recognition of collisions by all network stations is a necessary condition for the correct operation of the Ethernet network. If any transmitting station does not recognize the collision and decides that it transmitted the data frame correctly, then this data frame will be lost. Due to the overlap of signals during a collision, the frame information will be distorted, and it will be rejected by the receiving station (possibly due to a checksum mismatch). Most likely, the corrupted information will be retransmitted by some upper-layer protocol, such as a connection-oriented transport or application protocol. But the retransmission of the message by upper-level protocols will occur after a much longer time interval (sometimes even after several seconds) compared to the microsecond intervals that the Ethernet protocol operates. Therefore, if collisions are not reliably recognized by Ethernet network nodes, this will lead to a noticeable decrease in the useful throughput of this network.

For reliable collision detection, the following relationship must be satisfied:

T_min ≥ PDV,

where T_min is the transmission time of a frame of minimum length, and PDV is the time during which the collision signal manages to propagate to the farthest node in the network. Since in the worst case the signal must travel twice between the stations of the network that are most distant from each other (an undistorted signal passes in one direction, and a signal already distorted by a collision propagates on the way back), this time is called the double turnaround time (Path Delay Value, PDV).

If this condition is met, the transmitting station must be able to detect the collision caused by its transmitted frame even before it finishes transmitting this frame.

Obviously, the fulfillment of this condition depends, on the one hand, on the length of the minimum frame and network capacity, and on the other hand, on the length of the network cable system and the speed of signal propagation in the cable (this speed is slightly different for different types of cable).

All parameters of the Ethernet protocol are selected in such a way that during normal operation of network nodes, collisions are always clearly recognized. When choosing parameters, of course, the above relationship was taken into account, connecting the minimum frame length and the maximum distance between stations in a network segment.

The Ethernet standard assumes that the minimum length of a frame data field is 46 bytes (which, together with service fields, gives a minimum frame length of 64 bytes, and together with the preamble - 72 bytes or 576 bits). From here a limit on the distance between stations can be determined.

So, in 10 Mbit/s Ethernet, the transmission time of a minimum-length frame is 575 bit intervals; therefore, the double turnaround time must be less than 57.5 μs. The distance that the signal can travel during this time depends on the type of cable; for a thick coaxial cable it is approximately 13,280 m. Considering that during this time the signal must travel along the communication line twice, the distance between two nodes should not be more than 6,635 m. In the standard, the value of this distance is chosen to be significantly smaller, taking into account other, more stringent restrictions.

One of these restrictions is related to the maximum permissible signal attenuation. To ensure the required signal power when it passes between the most distant stations of a cable segment, the maximum length of a continuous segment of a thick coaxial cable, taking into account the attenuation it introduces, was chosen to be 500 m. Obviously, on a 500 m cable, the conditions for collision recognition will be met with a large margin for frames of any standard length, including 72 bytes (the double turnaround time along a 500 m cable is only 43.3 bit intervals). Therefore, the minimum frame length could be set even shorter. However, technology developers did not reduce the minimum frame length, keeping in mind multi-segment networks that are built from several segments connected by repeaters.
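The distance and margin estimates above can be reproduced with a short calculation. The sketch below is illustrative only: the propagation speed of 0.77 of the speed of light for thick coaxial cable is an assumption (the text does not state it, although it is consistent with the 13,280 m and 43.3-bit-interval figures), and the function names are hypothetical.

```python
# Sketch: collision-detection limits for 10 Mbit/s Ethernet.
BIT_RATE = 10_000_000          # bits per second
BIT_TIME = 1 / BIT_RATE        # one bit interval (0.1 microseconds)
SPEED_OF_LIGHT = 299_792_458   # metres per second
# ASSUMPTION: signal speed in thick coaxial cable is about 0.77 c.
PROPAGATION_SPEED = 0.77 * SPEED_OF_LIGHT


def max_node_distance(pdv_limit_bits: float) -> float:
    """Largest one-way distance (m) whose round trip fits in pdv_limit_bits."""
    round_trip_time = pdv_limit_bits * BIT_TIME
    return PROPAGATION_SPEED * round_trip_time / 2


def round_trip_bits(cable_length_m: float) -> float:
    """Round-trip propagation time over a cable, in bit intervals."""
    return 2 * cable_length_m / PROPAGATION_SPEED / BIT_TIME


print(f"maximum distance: {max_node_distance(575):.0f} m")
print(f"500 m round trip: {round_trip_bits(500):.1f} bit intervals")
```

Under this assumed speed, the results agree with the figures in the text to within rounding; a different cable type would only require changing `PROPAGATION_SPEED`.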

Repeaters increase the power of signals transmitted from segment to segment, as a result, signal attenuation is reduced and a much longer network can be used, consisting of several segments. In coaxial Ethernet implementations, designers have limited the maximum number of segments in the network to five, which in turn limits the total network length to 2500 meters. Even in such a multi-segment network, the collision detection condition is still met with a large margin (let us compare the distance of 2500 m obtained from the permissible attenuation condition with the maximum possible distance of 6635 m in terms of signal propagation time calculated above). However, in reality, the time margin is significantly less, since in multi-segment networks the repeaters themselves introduce an additional delay of several tens of bit intervals into the signal propagation. Naturally, a small margin was also made to compensate for deviations in cable and repeater parameters.

As a result of taking into account all these and some other factors, the ratio between the minimum frame length and the maximum possible distance between network stations was carefully selected, which ensures reliable collision recognition. This distance is also called the maximum network diameter.

As the frame transmission rate increases, which occurs in new standards based on the same CSMA/CD access method, such as Fast Ethernet, the maximum distance between network stations decreases in proportion to the increase in transmission rate. In the Fast Ethernet standard it is about 210 m, and in the Gigabit Ethernet standard it would be limited to 25 meters if the developers of the standard had not taken some measures to increase the minimum packet size.

PDV calculation

To simplify calculations, IEEE reference data is typically used; it gives propagation delay values for repeaters, transceivers, and various physical media. Table 3.5 provides the data necessary to calculate the PDV value for all physical Ethernet network standards. The bit interval is designated bt.

Table 3.5. Data for calculating the PDV value

The 802.3 Committee tried to simplify the calculations as much as possible, so the data presented in the table includes several stages of signal propagation. For example, the delays introduced by a repeater consist of the input transceiver delay, the repeater delay, and the output transceiver delay. However, in the table all these delays are represented by one value called the segment base. To avoid the need to add the delays introduced by the cable twice, the table gives double the delay values for each type of cable.

The table also uses concepts such as left segment, right segment and intermediate segment. Let us explain these terms using the example of the network shown in Fig. 3.13. The left segment is the segment in which the signal path begins from the transmitter output (output Tx in Fig. 3.10) of the end node. In the example, this is segment 1. The signal then passes through intermediate segments 2-5 and reaches the receiver (input Rx in Fig. 3.10) of the most distant node of the most distant segment 6, which is called the right segment. It is here that, in the worst case, frames collide, which is what the table assumes.

Fig. 3.13. Example of an Ethernet network consisting of segments of different physical standards

Each segment has an associated constant delay, called the base, which depends only on the type of segment and on the position of the segment in the signal path (left, intermediate or right). The base of the right segment in which the collision occurs is much larger than the base of the left and intermediate segments.

In addition, each segment is associated with a signal propagation delay along the segment cable, which depends on the segment length and is calculated by multiplying the signal propagation time along one meter of cable (in bit intervals) by the cable length in meters.

The calculation consists of calculating the delays introduced by each cable segment (the signal delay per 1 m of cable given in the table is multiplied by the length of the segment), and then summing these delays with the bases of the left, intermediate and right segments. The total PDV value should not exceed 575.

Since the left and right segments have different base delay values, when the segments at the remote edges of the network are of different types, the calculation must be performed twice: once taking a segment of one type as the left segment, and a second time taking a segment of the other type. The larger of the two PDV values is taken as the result. In our example, the extreme network segments belong to the same type, the 10Base-T standard, so a double calculation is not required. If they were segments of different types, then in the first case the segment between the station and hub 1 would be taken as the left segment, and in the second case the segment between the station and hub 5.

According to the 4-hub rule, the network shown in the figure is not correct: there are 5 hubs between the nodes of segments 1 and 6, although not all segments are 10Base-FB segments. In addition, the total network length is 2800 m, which violates the 2500 m rule. Let's calculate the PDV value for our example.

Left segment 1: 15.3 (base) + 100 × 0.113 = 26.6.

Intermediate segment 2: 33.5 + 1000 × 0.1 = 133.5.

Intermediate segment 3: 24 + 500 × 0.1 = 74.0.

Intermediate segment 4: 24 + 500 × 0.1 = 74.0.

Intermediate segment 5: 24 + 600 × 0.1 = 84.0.

Right segment 6: 165 + 100 × 0.113 = 176.3.

The sum of all components gives a PDV value of 568.4.

Since the PDV value is less than the maximum permissible value of 575, this network passes the double turnaround time criterion despite the fact that its total length is more than 2500 m and the number of repeaters is more than 4.
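The PDV calculation above can be expressed as a short script. This is a minimal sketch: the base delays, per-metre delays and segment lengths are copied from the worked example, while the data layout and function names are illustrative assumptions.

```python
# Each entry: (segment, base delay in bt, delay per metre in bt, length in m).
# All values come from the worked example in the text.
SEGMENTS = [
    ("left 1",          15.3, 0.113,  100),
    ("intermediate 2",  33.5, 0.1,   1000),
    ("intermediate 3",  24.0, 0.1,    500),
    ("intermediate 4",  24.0, 0.1,    500),
    ("intermediate 5",  24.0, 0.1,    600),
    ("right 6",        165.0, 0.113,  100),
]
PDV_LIMIT = 575  # maximum permissible PDV, in bit intervals


def segment_delay(base: float, per_metre: float, length_m: float) -> float:
    """Delay of one segment: its base plus the cable propagation delay."""
    return base + per_metre * length_m


def total_pdv(segments) -> float:
    """Sum the delays of all segments along the signal path."""
    return sum(segment_delay(b, d, l) for _, b, d, l in segments)


pdv = total_pdv(SEGMENTS)
print(f"PDV = {pdv:.1f} bt, within limit: {pdv <= PDV_LIMIT}")
```

When the two edge segments are of different types, the same function can be called twice with the left and right roles swapped, taking the larger result.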

PVV calculation

To recognize the network configuration as correct, it is also necessary to calculate the reduction of the interframe interval by repeaters, that is, the PVV (Path Variability Value).

To calculate the PVV, you can also use the maximum values of interframe interval reduction for repeaters of various physical media, recommended by the IEEE and given in Table 3.6.

Table 3.6. Reduction of the interframe interval by repeaters

In accordance with these data, we will calculate the PVV value for our example.

Left segment 1 (10Base-T): reduction of 10.5 bt.

Intermediate segment 2 (10Base-FL): 8 bt.

Intermediate segment 3 (10Base-FB): 2 bt.

Intermediate segment 4 (10Base-FB): 2 bt.

Intermediate segment 5 (10Base-FB): 2 bt.

The sum of these values gives a PVV value of 24.5, which is less than the limit of 49 bit intervals.
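The same check can be written as a few lines of code. This is only a sketch: the reduction values are those listed for the example network, segment 6 is omitted because it does not appear in the list above, and the names are illustrative.

```python
PVV_LIMIT = 49  # maximum permissible reduction, in bit intervals

# Interframe-interval reduction (bt) per segment, as listed in the example.
REDUCTIONS = {
    "left 1 (10Base-T)": 10.5,
    "intermediate 2 (10Base-FL)": 8.0,
    "intermediate 3 (10Base-FB)": 2.0,
    "intermediate 4 (10Base-FB)": 2.0,
    "intermediate 5 (10Base-FB)": 2.0,
}

pvv = sum(REDUCTIONS.values())
print(f"total reduction = {pvv} bt, within limit: {pvv < PVV_LIMIT}")
```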

As a result, the network shown in the example complies with Ethernet standards in all parameters related to both segment lengths and the number of repeaters.

Maximum Ethernet Performance

The number of Ethernet frames processed per second is often specified by bridge/switch and router manufacturers as the primary performance characteristic of these devices. In turn, it is interesting to know the net maximum throughput of an Ethernet segment in frames per second in the ideal case when there are no collisions in the network and no additional delays introduced by bridges and routers. This indicator helps to assess the performance requirements of communication devices, since each device port cannot receive more frames per unit of time than the corresponding protocol allows.

For communications equipment, the most difficult mode is processing frames of minimum length. A bridge, switch or router spends approximately the same time processing each frame (looking up the forwarding table, forming a new frame in the case of a router, and so on), and the number of minimum-length frames arriving at the device per unit of time is naturally greater than the number of frames of any other length. Another performance characteristic of communications equipment, bits per second, is used less frequently, since it does not indicate what size frames the device was processing, and high performance measured in bits per second is much easier to achieve with frames of the maximum size.

Using the parameters given in Table 3.1, we calculate the maximum performance of an Ethernet segment in units such as the number of transmitted frames (packets) of minimum length per second.

NOTE. When referring to network capacity, the terms frame and packet are usually used interchangeably. Accordingly, the performance units frames per second (fps) and packets per second (pps) are equivalent.

To calculate the maximum number of frames of minimum length passing over an Ethernet segment, note that the size of a frame of minimum length together with the preamble is 72 bytes or 576 bits (Fig. 3.5), so its transmission takes 57.6 μs. Adding the interframe interval of 9.6 μs gives a repetition period of 67.2 μs for frames of minimum length. Hence, the maximum possible throughput of an Ethernet segment is 14,880 fps.

Fig. 3.5. Calculating the throughput of the Ethernet protocol

Naturally, the presence of several nodes in a segment reduces this value due to waiting for access to the medium, as well as due to collisions leading to the need to retransmit frames.

Maximum-length Ethernet frames have a data field of 1500 bytes, which together with service information gives 1518 bytes, and with the preamble amounts to 1526 bytes or 12,208 bits. The maximum possible throughput of an Ethernet segment for maximum-length frames is 813 fps. Obviously, when working with large frames, the load on bridges, switches and routers is noticeably reduced.
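The frame-rate figures above follow from dividing the bit rate by the number of bit intervals each frame occupies on the wire, including the interframe interval. A minimal sketch of that arithmetic is shown below; the constant and function names are illustrative, and the 18 bytes of service fields are the difference between the 64-byte minimum frame and its 46-byte data field.

```python
BIT_RATE = 10_000_000      # bits per second (10 Mbit/s Ethernet)
PREAMBLE_BYTES = 8
SERVICE_BYTES = 18         # addresses, length/type field and checksum
IPG_BITS = 96              # interframe interval: 9.6 microseconds at 10 Mbit/s


def frames_per_second(data_bytes: int) -> float:
    """Maximum frame rate for a given data field size, with no collisions."""
    frame_bits = (PREAMBLE_BYTES + SERVICE_BYTES + data_bytes) * 8
    return BIT_RATE / (frame_bits + IPG_BITS)


for size in (46, 1500):
    print(f"{size:>4}-byte data field: {frames_per_second(size):.0f} fps")
```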

Now let's calculate the maximum useful throughput in bits per second that Ethernet segments have when using frames of different sizes.

Useful protocol throughput refers to the transmission rate of user data carried in the frame data field. This throughput is always less than the nominal bit rate of the Ethernet protocol due to several factors:

· frame service information;

· interframe intervals (IPG);

· waiting for access to the medium.

For frames of minimum length, the useful throughput is:

S_P = 14,880 × 46 × 8 = 5.48 Mbit/s.

This is much less than 10 Mbit/s, but it should be taken into account that frames of the minimum length are used mainly for transmitting acknowledgments, so this speed has little to do with the transfer of actual file data.

For frames of maximum length, the usable throughput is:

S_P = 813 × 1500 × 8 = 9.76 Mbit/s,

which is very close to the nominal speed of the protocol.

We emphasize once again that such speed can be achieved only in the case when two interacting nodes on an Ethernet network are not interfered with by other nodes, which is extremely rare.

Using medium-sized frames with a data field of 512 bytes, the network throughput will be 9.29 Mbps, which is also quite close to the maximum throughput of 10 Mbps.

ATTENTION. The ratio of the current network throughput to its maximum throughput is called the network utilization factor. When determining the current throughput, the transmission of any information over the network, both user and service, is taken into account. The factor is an important indicator for shared media technologies, since with a random access method a high utilization factor often indicates low useful network throughput (that is, a low rate of user data transmission): nodes spend too much time gaining access to the medium and retransmitting frames after collisions.

In the absence of collisions and access waits, the network utilization factor depends on the size of the frame data field and has a maximum value of 0.976 when transmitting frames of maximum length. Obviously, in a real Ethernet network, the average network utilization can differ significantly from this value. More complex cases of determining network capacity, taking into account access waiting and handling collisions, will be discussed below.
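The dependence on the data field size can be illustrated with a short calculation. This is a sketch under the same idealized assumptions (no collisions, no access waits); the function names are hypothetical. Because it uses unrounded frame rates, its results match the figures above only to within rounding.

```python
BIT_RATE = 10_000_000                 # nominal bit rate, bits per second
# Per-frame overhead: 8-byte preamble, 18 bytes of service fields,
# and the 96-bit interframe interval.
OVERHEAD_BITS = (8 + 18) * 8 + 96


def useful_throughput(data_bytes: int) -> float:
    """User-data rate in bit/s for back-to-back frames of one size."""
    data_bits = data_bytes * 8
    frames_per_second = BIT_RATE / (data_bits + OVERHEAD_BITS)
    return frames_per_second * data_bits


def utilization_factor(data_bytes: int) -> float:
    """Share of the nominal bit rate that carries user data."""
    return useful_throughput(data_bytes) / BIT_RATE


for size in (46, 1500):
    print(f"{size:>4} bytes: {useful_throughput(size) / 1e6:.2f} Mbit/s, "
          f"factor {utilization_factor(size):.3f}")
```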

Ethernet Frame Formats

The Ethernet technology standard, described in IEEE 802.3, describes a single MAC layer frame format. Since the MAC layer frame must contain an LLC layer frame, described in the IEEE 802.2 document, according to IEEE standards, only a single version of the link layer frame can be used in an Ethernet network, the header of which is a combination of the MAC and LLC sublayer headers.

However, in practice, Ethernet networks use frames of 4 different formats (types) at the data link level. This is due to the long history of the development of Ethernet technology, dating back to the period before the adoption of IEEE 802 standards, when the LLC sublayer was not separated from the general protocol and, accordingly, the LLC header was not used.

In 1980, a consortium of three firms (Digital, Intel and Xerox) submitted its proprietary version of the Ethernet standard (which, of course, described a specific frame format) to the 802.3 committee as a draft international standard, but the 802.3 committee adopted a standard that differed in some details from the DIX proposal. The differences also concerned the frame format, which gave rise to two different types of frames in Ethernet networks.

Another frame format emerged as a result of Novell's efforts to speed up its Ethernet protocol stack.

Finally, the fourth frame format was the result of the 802.2 committee's efforts to bring previous frame formats to some common standard.

Differences in frame formats can lead to incompatibility in the operation of hardware and network software designed to work with only one Ethernet frame standard. However, today almost all network adapters, network adapter drivers, bridges/switches and routers can work with all Ethernet technology frame formats used in practice, and frame type recognition is performed automatically.

Below is a description of all four types of Ethernet frames (here, a frame refers to the entire set of fields that relate to the data link layer, that is, the fields of the MAC and LLC layers). The same frame type can have different names, so below for each frame type are several of the most common names:

· 802.3/LLC frame (802.3/802.2 frame or Novell 802.2 frame);

· Raw 802.3 frame (or Novell 802.3 frame);

· Ethernet DIX frame (or Ethernet II frame);

· Ethernet SNAP frame.

The formats of all these four types of Ethernet frames are shown in Fig. 3.6.
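Automatic recognition of the frame type can be sketched as follows. The text does not list the recognition rules, so the rules used here are an assumption based on how these formats are commonly distinguished: a value of 0x0600 or more in the field after the source address marks an Ethernet DIX frame, otherwise the first two bytes after the length field are examined (0xFFFF for Raw 802.3, 0xAAAA for SNAP). The function name is hypothetical.

```python
import struct


def classify_frame(frame: bytes) -> str:
    """Guess the Ethernet frame format; frame starts at the destination MAC."""
    if len(frame) < 16:
        raise ValueError("frame too short to classify")
    # Bytes 12-13 hold either a protocol type (DIX) or a length (802.3).
    (type_or_length,) = struct.unpack("!H", frame[12:14])
    if type_or_length >= 0x0600:
        return "Ethernet DIX"
    if type_or_length > 1500:
        return "unknown"               # neither a valid length nor a type
    first, second = frame[14], frame[15]
    if (first, second) == (0xFF, 0xFF):
        return "Raw 802.3"             # no LLC header, data starts with 0xFFFF
    if (first, second) == (0xAA, 0xAA):
        return "Ethernet SNAP"         # LLC header with DSAP = SSAP = 0xAA
    return "802.3/LLC"


header = bytes(12)                     # placeholder destination and source MACs
print(classify_frame(header + b"\x08\x00" + bytes(46)))
```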

Conclusions

· Ethernet is the most common local network technology today. In a broad sense, Ethernet is an entire family of technologies that includes various proprietary and standard variants, of which the most famous are the proprietary DIX Ethernet variant, the 10 Mbit/s variants of the IEEE 802.3 standard, and the newer high-speed Fast Ethernet and Gigabit Ethernet technologies. Almost all types of Ethernet technologies use the same method of sharing the data transmission medium, the CSMA/CD random access method, which defines the character of the technology as a whole.

· In a narrow sense, Ethernet is a 10-megabit technology described in the IEEE 802.3 standard.

· An important phenomenon in Ethernet networks is collision - a situation when two stations simultaneously try to transmit a data frame over a common medium. The presence of collisions is an inherent property of Ethernet networks, resulting from the random access method adopted. The ability to clearly recognize collisions is due to the correct choice of network parameters, in particular, compliance with the ratio between the minimum frame length and the maximum possible network diameter.

· Network performance is greatly influenced by the network utilization factor, which reflects how heavily the network is loaded. When this factor is above 50%, the useful network throughput drops sharply because of more frequent collisions and longer waits for access to the medium.

· The maximum possible throughput of an Ethernet segment in frames per second is achieved when transmitting frames of the minimum length and is 14,880 frames/s. At the same time, the useful network throughput is only 5.48 Mbit/s, which is only slightly more than half the nominal throughput - 10 Mbit/s.

· The maximum useful throughput of an Ethernet network is 9.76 Mbit/s, which corresponds to frames of the maximum length of 1518 bytes transmitted over the network at 813 frames/s.

· In the absence of collisions and access waits, the network utilization factor depends on the size of the frame data field and has a maximum value of 0.976.

· Ethernet technology supports 4 different frame types that share a common host address format. There are formal characteristics by which network adapters automatically recognize the type of frame.

· Depending on the type of physical medium, the IEEE 802.3 standard defines various specifications: 10Base-5, 10Base-2, 10Base-T, FOIRL, 10Base-FL, 10Base-FB. For each specification, the cable type, the maximum lengths of continuous cable sections are determined, as well as the rules for using repeaters to increase the network diameter: the “5-4-3” rule for coaxial network options, and the “4-hub” rule for twisted pair and fiber optics.

· For a "mixed" network consisting of different types of physical segments, it is useful to calculate the total network length and the allowable number of repeaters. The IEEE 802.3 Committee provides input data for these calculations that indicate the delays introduced by repeaters of various physical media specifications, network adapters, and cable segments.

Network technologies IEEE802.5/Token-Ring

Token Ring networks, like Ethernet networks, are characterized by a shared data transmission medium, which in this case consists of cable segments connecting all network stations into a ring. The ring is considered a common shared resource, and access to it is governed not by a random algorithm, as in Ethernet networks, but by a deterministic one, based on passing the right to use the ring from station to station in a certain order. This right is conveyed using a frame of a special format called a token (or marker).

Token Ring networks operate at two bit rates - 4 and 16 Mbit/s. Mixing of stations operating at different speeds in the same ring is not allowed. Token Ring networks operating at 16 Mbps have some improvements in the access algorithm compared to the 4 Mbps standard.

Token Ring is a more sophisticated technology than Ethernet and has fault tolerance properties. The Token Ring network defines procedures for monitoring network operation that rely on the feedback inherent in a ring structure: a sent frame always returns to the sending station. In some cases, detected errors are eliminated automatically; for example, a lost token can be restored. In other cases, errors are only recorded, and they are eliminated manually by maintenance personnel.

To control the network, one of the stations acts as a so-called active monitor. The active monitor is selected during ring initialization as the station with the maximum MAC address value. If the active monitor fails, the ring initialization procedure is repeated and a new active monitor is selected. In order for the network to detect the failure of the active monitor, the active monitor generates a special frame of its presence every 3 seconds in a healthy state. If this frame does not appear on the network for more than 7 seconds, then the remaining stations of the network begin the procedure for selecting a new active monitor.
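The selection rule and the timing values above can be sketched in code. This is an illustration only: the 3-second and 7-second values and the maximum-MAC-address rule come from the text, while the function names and the example addresses are hypothetical.

```python
ANNOUNCE_INTERVAL_S = 3   # the active monitor announces itself every 3 s
FAILURE_TIMEOUT_S = 7     # a new selection starts after 7 s of silence


def mac_to_int(mac: str) -> int:
    """Convert 'AA:BB:CC:DD:EE:FF' (or with dashes) to an integer."""
    return int(mac.replace(":", "").replace("-", ""), 16)


def select_active_monitor(station_macs: list[str]) -> str:
    """Pick the station with the numerically largest MAC address."""
    return max(station_macs, key=mac_to_int)


def monitor_has_failed(last_announcement_s: float, now_s: float) -> bool:
    """True if no presence frame has been seen for longer than the timeout."""
    return now_s - last_announcement_s > FAILURE_TIMEOUT_S


stations = ["00:00:5A:00:00:01", "00:00:5A:00:00:FF", "00:00:4B:FF:FF:FF"]
print(select_active_monitor(stations))
```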

Token Ring Frame Formats

There are three different frame formats in Token Ring:

· token;

· data frame;

· abort sequence.

Physical layer of Token Ring technology

The IBM Token Ring standard initially provided for the construction of connections in the network using hubs called MAU (Multistation Access Unit) or MSAU (Multi-Station Access Unit), that is, multiple access devices (Fig. 3.15). The Token Ring network can include up to 260 nodes.

Fig. 3.15. Physical configuration of the Token Ring network

A Token Ring hub can be active or passive. A passive hub simply interconnects ports internally so that stations connected to those ports form a ring. The passive MSAU does not perform signal amplification or resynchronization. Such a device can be considered a simple patch panel with one exception: the MSAU bypasses a port when the computer connected to that port is turned off. This function is necessary to keep the ring connected regardless of the state of the attached computers. Typically, the port is bypassed by relay circuits that are powered by direct current from the network adapter; when the network adapter is turned off, normally closed relay contacts connect the input of the port to its output.

An active hub performs signal regeneration functions and is therefore sometimes called a repeater, as in the Ethernet standard.

The question arises - if the hub is a passive device, then how is high-quality transmission of signals over long distances, which occurs when several hundred computers are connected to a network, ensured? The answer is that in this case each network adapter takes on the role of a signal amplifier, and the role of a resynchronization unit is performed by the network adapter of the active ring monitor. Each Token Ring network adapter has a repeater that can regenerate and resynchronize signals, but only the active monitor repeater in the ring performs the latter function.

The resynchronization block consists of a 30-bit buffer that receives Manchester-encoded signals whose bit intervals have been somewhat distorted during a trip around the ring. With the maximum number of stations in the ring (260), the variation in the bit circulation delay around the ring can reach 3 bit intervals. The active monitor "inserts" its buffer into the ring and synchronizes the bit signals, outputting them at the required frequency.

In general, the Token Ring network has a combined star-ring configuration. End nodes are connected to the MSAU in a star topology, and the MSAUs themselves are combined through special Ring In (RI) and Ring Out (RO) ports to form a backbone physical ring.

All stations in the ring must operate at the same speed - either 4 Mbit/s or 16 Mbit/s. The cables connecting the station to the hub are called lobe cables, and the cables connecting the hubs are called trunk cables.

Token Ring technology allows you to use different types of cable to connect end stations and hubs: STP Type 1, UTP Type 3, UTP Type 6, as well as fiber optic cable.

When using shielded twisted pair STP Type 1 from the IBM cable system range, up to 260 stations can be combined into a ring with a lobe cable length of up to 100 meters, and when using unshielded twisted pair, the maximum number of stations is reduced to 72 with a lobe cable length of up to 45 meters.

The distance between passive MSAUs can reach 100 m when using STP Type 1 cable and 45 m when using UTP Type 3 cable. Between active MSAUs, the maximum distance increases respectively to 730 m or 365 m depending on the cable type.

The maximum ring length of a Token Ring network is 4000 m. The restrictions on the maximum ring length and the number of stations in a ring are not as strict in Token Ring technology as in Ethernet technology. Here, these restrictions are largely related to the time the token takes to travel around the ring (although other considerations also influence the choice of restrictions). So, if the ring consists of 260 stations, then with a token holding time of 10 ms the token will return to the active monitor in the worst case after 2.6 s, and this time is exactly the token rotation control timeout. In principle, all timeout values in the network adapters of Token Ring nodes are configurable, so it is possible to build a Token Ring network with more stations and a longer ring.
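The relationship between the number of stations, the token holding time and the rotation timeout can be checked with a few lines of code. This is a simplified sketch: it ignores signal propagation and station delays, works in whole milliseconds, and the function names are illustrative.

```python
def worst_case_rotation_ms(stations: int, holding_time_ms: int) -> int:
    """Worst case: every station holds the token for the full holding time."""
    return stations * holding_time_ms


def max_stations(timeout_ms: int, holding_time_ms: int) -> int:
    """Largest ring whose worst-case rotation still fits within the timeout."""
    return timeout_ms // holding_time_ms


print(worst_case_rotation_ms(260, 10), "ms for 260 stations")
print(max_stations(2600, 10), "stations fit in a 2.6 s timeout")
```

Raising the configurable timeout in this model raises the permitted number of stations proportionally, which is the effect described above.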

Conclusions

· Token Ring technology is developed primarily by IBM and also has IEEE 802.5 status, which reflects the most important improvements being made to IBM technology.

· Token Ring networks use a token access method, which guarantees that each station can access the shared ring within the token rotation time. Because of this property, this method is sometimes called deterministic.

· The access method is based on priorities: 0 (lowest) to 7 (highest). The station itself determines the priority of the current frame and can capture the ring only if there are no higher priority frames in the ring.

· Token Ring networks operate at two speeds: 4 and 16 Mbps and can use shielded twisted pair, unshielded twisted pair, and fiber optic cable as the physical media. The maximum number of stations in the ring is 260, and the maximum length of the ring is 4 km.

· Token Ring technology has elements of fault tolerance. Thanks to the feedback provided by the ring, one of the stations, the active monitor, continuously monitors the presence of the token as well as the rotation time of the token and data frames. If the ring does not operate correctly, its reinitialization procedure is started, and if this does not help, the beaconing procedure is used to localize the faulty cable section or the faulty station.

· The maximum data field size of a Token Ring frame depends on the speed of the ring. For a speed of 4 Mbit/s it is about 5000 bytes, and at a speed of 16 Mbit/s it is about 16 KB. The minimum size of the frame data field is not defined, that is, it can be equal to 0.

· In the Token Ring network, stations are connected into a ring using hubs called MSAUs. A passive MSAU acts as a patch panel that connects the output of the previous station in the ring to the input of the next one. The maximum distance from a station to the MSAU is 100 m for STP and 45 m for UTP.

· An active monitor also acts as a repeater in the ring - it resynchronizes signals passing through the ring.

· The ring can be built on the basis of an active MSAU hub, which in this case is called a repeater.

· A Token Ring network can be built from several rings separated by bridges that forward frames using source routing, for which a special field describing the route through the rings is added to the Token Ring frame.

Network technologies IEEE802.4/ArcNet

The ArcNet network uses "bus" and "passive star" topologies. It supports shielded and unshielded twisted pair and fiber optic cable. To access the medium, the ArcNet network uses a token passing method. ArcNet is one of the oldest networks and was once very popular. Its main advantages are high reliability, low adapter cost and flexibility. Its main disadvantage is the low data transfer rate (2.5 Mbit/s). The maximum number of subscribers is 255. The maximum network length is 6000 meters.

Network technology FDDI (Fiber Distributed Data Interface)

FDDI is a standardized specification for a network architecture for high-speed data transmission over fiber optic lines. The transfer speed is 100 Mbit/s. This technology is largely based on the Token Ring architecture and uses deterministic token access to the data transmission medium. The maximum length of the network ring is 100 km. The maximum number of network subscribers is 500. The FDDI network is highly reliable: it is built on two fiber optic rings that form the main and backup data transmission paths between nodes.

Main characteristics of the technology

FDDI technology is largely based on Token Ring technology, developing and improving its basic ideas. The developers of FDDI technology set themselves the following goals as their highest priority:

· increase the bit rate of data transfer to 100 Mbit/s;

· increase the fault tolerance of the network through standard procedures for restoring it after various types of failures - cable damage, incorrect operation of a node, hub, high levels of interference on the line, etc.;

· make the most of potential network bandwidth for both asynchronous and synchronous (latency-sensitive) traffic.

The FDDI network is built on the basis of two fiber optic rings, which form the main and backup data transmission paths between network nodes. Having two rings is the primary way to increase fault tolerance in an FDDI network, and nodes that want to take advantage of this increased reliability potential must be connected to both rings.

In normal network operation mode, data passes through all nodes and all cable sections of the Primary ring only; this mode is called the Thru- “end-to-end” or “transit”. The Secondary ring is not used in this mode.

In the event of a failure where part of the primary ring cannot transmit data (for example, a broken cable or a node failure), the primary ring is combined with the secondary ring (Figure 3.16), again forming a single ring. This mode of network operation is called Wrap, that is, the “wrapping” or “folding” of the rings. The wrap operation is performed by FDDI hubs and/or network adapters. To simplify this procedure, data on the primary ring is always transmitted in one direction (shown counterclockwise in the diagrams), and on the secondary ring in the opposite direction (shown clockwise). Therefore, when the two rings are joined into one, the transmitters of the stations remain connected to the receivers of neighboring stations, so information is still correctly transmitted and received between neighbors.
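The Wrap reconfiguration described above can be sketched as a small topology model. This is only an illustration under simplifying assumptions: stations are numbered 0 to n-1, the primary ring runs from station i to station i+1, the secondary ring runs the opposite way, and a single primary link fails. The function name `wrapped_ring` and its parameters are hypothetical and do not come from the FDDI standard.

```python
def wrapped_ring(num_stations: int, broken_after: int) -> list[tuple[int, str]]:
    """Return the hop sequence of the single ring formed after a Wrap.

    Assumptions (illustrative model only):
    - the primary ring carries data from station i to (i + 1) % num_stations;
    - the secondary ring carries data in the opposite direction;
    - `broken_after` is the station whose outgoing primary link has failed.
    """
    if num_stations < 2:
        raise ValueError("a ring needs at least two stations")
    if not 0 <= broken_after < num_stations:
        raise ValueError("broken_after must be a valid station index")

    # The station just past the break is where the wrapped ring "starts".
    start = (broken_after + 1) % num_stations
    hops: list[tuple[int, str]] = []

    # Travel along the primary ring from `start` around to `broken_after`.
    for step in range(num_stations):
        hops.append(((start + step) % num_stations, "primary"))

    # `broken_after` wraps traffic onto the secondary ring, which flows back
    # through the intermediate stations until `start` wraps it onto the
    # primary ring again.
    for step in range(num_stations - 2, 0, -1):
        hops.append(((start + step) % num_stations, "secondary"))

    return hops


if __name__ == "__main__":
    for station, ring in wrapped_ring(4, broken_after=1):
        print(station, ring)
```

With four stations and a break after station 1, the path runs 2, 3, 0, 1 on the primary ring and then back through 0 and 3 on the secondary ring before returning to station 2, so every station stays reachable on one closed loop.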

Fig. 3.16. Reconfiguration of FDDI rings upon failure

FDDI standards place a lot of emphasis on various procedures that allow you to determine if there is a fault in the network and then make the necessary reconfiguration. The FDDI network can fully restore its functionality in the event of single failures of its elements. When there are multiple failures, the network splits into several unconnected networks. FDDI technology complements the failure detection mechanisms of Token Ring technology with mechanisms for reconfiguring the data transmission path in the network, based on the presence of redundant links provided by the second ring.

The rings in FDDI networks are treated as a common shared data transmission medium, so a special access method is defined for them. This method is very close to the access method of Token Ring networks and is also called the token ring method.

The difference in the access method is that the token holding time in an FDDI network is not constant, as it is in a Token Ring network. It depends on the load on the ring: under light load it increases, and under heavy overload it can fall to zero. These changes affect only asynchronous traffic, which tolerates small delays in frame transmission. For synchronous traffic, the token holding time is still a fixed value. FDDI has no frame priority mechanism like the one adopted in Token Ring. The developers decided that dividing traffic into 8 priority levels is redundant and that two classes are enough: asynchronous and synchronous, the latter of which is always serviced, even when the ring is overloaded.
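The load-dependent holding time can be illustrated with a minimal sketch. It assumes the usual timed-token rule, in which a station compares the measured token rotation time with an agreed target rotation time and may use only the unused remainder for asynchronous frames. The function names, parameter names and millisecond values below are illustrative assumptions, not values taken from the text.

```python
def async_holding_time_ms(target_rotation_ms: float,
                          measured_rotation_ms: float) -> float:
    """Time a station may hold the token for asynchronous frames.

    The faster the token came around (light load), the more time is left;
    if the token is late (overload), nothing is left.
    """
    return max(0.0, target_rotation_ms - measured_rotation_ms)


def holding_time_ms(traffic_class: str,
                    target_rotation_ms: float,
                    measured_rotation_ms: float,
                    sync_allocation_ms: float) -> float:
    """Holding time for either traffic class.

    Synchronous traffic always gets its fixed allocation; asynchronous
    traffic gets only what the current ring load leaves over.
    """
    if traffic_class == "synchronous":
        return sync_allocation_ms
    if traffic_class == "asynchronous":
        return async_holding_time_ms(target_rotation_ms, measured_rotation_ms)
    raise ValueError(f"unknown traffic class: {traffic_class!r}")


if __name__ == "__main__":
    # Hypothetical numbers: an 8 ms target rotation time.
    for measured in (2.0, 6.0, 8.0, 11.0):
        print(measured, async_holding_time_ms(8.0, measured))
```

In this sketch a lightly loaded ring (2 ms rotation against an 8 ms target) leaves 6 ms for asynchronous frames, while an overloaded ring leaves none, and the synchronous allocation is unaffected in both cases.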

Otherwise, frame forwarding between ring stations at the MAC level is fully compliant with Token Ring technology. FDDI stations use an early token release algorithm, similar to Token Ring networks with a speed of 16 Mbps.

MAC-level addresses use the standard format for IEEE 802 technologies. The FDDI frame format is close to the Token Ring frame format; the main difference is the absence of priority fields. The address-recognized, frame-copied and error indicators preserve the frame-processing procedures used in Token Ring networks by the sending station, intermediate stations and the receiving station.

Figure 3.17 shows how the protocol structure of FDDI technology corresponds to the seven-layer OSI model. FDDI defines the physical layer protocol and the media access control (MAC) sublayer protocol of the data link layer. Like many other local area network technologies, FDDI uses the LLC (logical link control) sublayer protocol defined in the IEEE 802.2 standard. Thus, although FDDI technology was developed and standardized by ANSI and not by IEEE, it fits entirely within the framework of the 802 standards.

Fig. 3.17. Structure of FDDI technology protocols

A distinctive feature of FDDI technology is the station control level - Station Management (SMT). It is the SMT layer that performs all the functions of managing and monitoring all other layers of the FDDI protocol stack. Each node in the FDDI network takes part in managing the ring. Therefore, all nodes exchange special SMT frames to manage the network.

Fault tolerance of FDDI networks is also ensured by protocols of other layers. The physical layer handles network failures with physical causes, for example a broken cable. The MAC layer handles logical network failures, for example the loss of the internal path required to transmit the token and data frames between hub ports.

Conclusions

· FDDI technology was the first to use fiber optic cable in local area networks and operate at 100 Mbps.

· There is significant continuity between Token Ring and FDDI technologies: both are characterized by a ring topology and a token access method.

· FDDI technology is the most fault-tolerant local network technology. In case of single failures of the cable system or station, the network, due to the “folding” of the double ring into a single one, remains fully operational.

· The FDDI token access method operates differently for synchronous and asynchronous frames (the frame type is determined by the station). To transmit a synchronous frame, a station can always capture an incoming token for a fixed time. To transmit an asynchronous frame, a station can capture a token only if the token has completed a rotation around the ring quickly enough, which indicates that there is no ring congestion. This access method, firstly, gives preference to synchronous frames, and secondly, regulates the ring load, slowing down the transmission of non-urgent asynchronous frames.

· FDDI technology uses fiber optic cables and Category 5 UTP as the physical medium (this physical layer option is called TP-PMD).

· The maximum number of dual-attachment stations in a ring is 500, and the maximum diameter of a double ring is 100 km. The maximum distance between adjacent nodes is 2 km for multimode cable and 100 m for Category 5 UTP twisted pair; for single-mode optical fiber it depends on the fiber quality.

Network technology is a coordinated set of standard protocols and software and hardware that implement them, sufficient for building computer networks.

Protocol is a set of rules and agreements that determine how devices on a network exchange data.

Currently, the following network technologies dominate: Ethernet, Token Ring, FDDI, ATM.

Ethernet technology

Ethernet technology was created by Xerox in 1973. The basic principle underlying Ethernet is random access to a shared data transmission medium (the multiple access method).

The logical topology of an Ethernet network is always bus, so data is transmitted to all network nodes. Each node sees each transmission and distinguishes the data intended for it by the address of its network adapter. At any given time, only one node can carry out a successful transmission, so there must be some kind of agreement between the nodes on how they can use the same cable together so as not to interfere with each other. This agreement defines the Ethernet standard.

As the network load increases, it becomes more and more likely that two nodes will need to transmit data at the same time. When this happens, the two transmissions conflict, filling the bus with garbage. This event is known as a “collision”.

Each transmitting system, upon detecting a collision, immediately stops sending data and takes action to correct the situation.

Most collisions on a typical Ethernet network are resolved within microseconds, and their occurrence is natural and expected. The main disadvantage is that the more traffic there is on the network, the more collisions occur; network performance then drops sharply, and the network may collapse, that is, become clogged with traffic.
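The link between load and collisions can be made concrete with a deliberately simplified model. The sketch below assumes time is divided into slots and that each of n stations independently tries to transmit in a slot with probability p; this slotted model is an assumption made for illustration and is not how the Ethernet standard itself is specified. The function name is hypothetical.

```python
def slot_outcome_probabilities(num_stations: int,
                               p_transmit: float) -> dict[str, float]:
    """Probabilities that a slot is idle, carries one frame, or collides.

    Simplified model: each station transmits independently with
    probability `p_transmit` in every slot.
    """
    if num_stations < 1:
        raise ValueError("need at least one station")
    if not 0.0 <= p_transmit <= 1.0:
        raise ValueError("p_transmit must be between 0 and 1")

    # Nobody transmits.
    idle = (1.0 - p_transmit) ** num_stations
    # Exactly one station transmits, so the frame gets through.
    success = num_stations * p_transmit * (1.0 - p_transmit) ** (num_stations - 1)
    # Two or more stations transmit at once.
    collision = 1.0 - idle - success
    return {"idle": idle, "success": success, "collision": collision}


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.1, 0.3, 0.5):
        probs = slot_outcome_probabilities(10, p)
        print(f"p={p:.2f}  collision={probs['collision']:.3f}  "
              f"success={probs['success']:.3f}")
```

Running the loop for ten stations shows the collision share growing steadily with p while the share of successful slots eventually falls, which is the performance drop described above.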

Traffic is the flow of messages in a data network.

Token Ring Technology

Token Ring technology was developed by IBM in 1984. It uses a completely different access method. The logical topology of a Token Ring network is a ring. A special three-byte packet, known as a token, constantly circulates around the logical ring in one direction. When the token passes through a node that is ready to send data, the node grabs the token, attaches the data to be sent, and passes the message back onto the ring. The message continues its “journey” around the ring until it reaches its destination. Until the message is received, no other node can send data. This access method is known as token passing. Unlike Ethernet, it eliminates collisions and random delay periods.
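Token passing can be sketched as a short simulation. It assumes, for simplicity, that a station sends at most one queued frame each time it captures the token and that the token then moves on to the next station; the function name and the frame labels are hypothetical.

```python
from collections import deque


def simulate_token_ring(queues: list[list[str]],
                        rotations: int) -> list[tuple[int, str]]:
    """Circulate a token and return the transmissions in the order they occur.

    `queues[i]` holds the frames waiting at station i. On each rotation the
    token visits stations in ring order; a station with pending data captures
    the token, sends one frame, and releases the token.
    """
    pending = [deque(q) for q in queues]
    sent: list[tuple[int, str]] = []
    for _ in range(rotations):
        for station, queue in enumerate(pending):
            if queue:  # only a station with data captures the token
                sent.append((station, queue.popleft()))
    return sent


if __name__ == "__main__":
    order = simulate_token_ring([["a1", "a2"], [], ["c1"]], rotations=2)
    print(order)
```

Because only the token holder transmits, two frames are never on the ring at once in this model, and the order of transmissions is fully determined by the ring order rather than by chance.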

FDDI technology

FDDI (Fiber Distributed Data Interface) is the first local network technology in which the data transmission medium is fiber optic cable. FDDI technology is largely based on Token Ring technology, developing and improving its basic ideas. The FDDI network is built on the basis of two fiber optic rings, which form the main and backup data transmission paths between network nodes. Having two rings is the primary way to increase fault tolerance in an FDDI network, and nodes that want to take advantage of this increased reliability must be connected to both rings.

In normal network operation mode, data passes through all nodes and all cable sections of the primary ring only; the secondary ring is not used in this mode. In the event of some type of failure where part of the primary ring cannot transmit data (for example, a broken cable or node failure), the primary ring is combined with the secondary ring, again forming a single ring.

The rings in FDDI networks are treated as a common data transmission medium, so a special access method is defined for them, very close to the access method of Token Ring networks. The difference is that the token holding time in an FDDI network is not constant, as it is in Token Ring. It depends on the ring load: under light load it increases, and under heavy congestion it can fall to zero for asynchronous traffic. For synchronous traffic, the token holding time remains a fixed value.

ATM technology

ATM (Asynchronous Transfer Mode) is the most modern network technology. It is designed to transmit voice, data and video using a high-speed, connection-oriented cell switching protocol.

Unlike other technologies, ATM divides traffic into fixed-size 53-byte cells. Using a data structure of predefined size makes network traffic easier to quantify, predict and manage. ATM is based on transmitting information over fiber optic cable using a star topology.
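Splitting data into fixed-size cells can be sketched as follows. A 53-byte ATM cell consists of a 5-byte header and a 48-byte payload. The all-zero header and the zero padding of the last cell are placeholders chosen for illustration; real ATM headers carry routing fields, and real adaptation layers define their own padding and trailers. The function name is hypothetical.

```python
CELL_SIZE = 53                            # total ATM cell size in bytes
HEADER_SIZE = 5                           # cell header
PAYLOAD_SIZE = CELL_SIZE - HEADER_SIZE    # 48 bytes of payload per cell


def segment_into_cells(data: bytes,
                       header: bytes = b"\x00" * HEADER_SIZE) -> list[bytes]:
    """Split `data` into 53-byte cells.

    The header is a placeholder (assumption), and the final payload is padded
    with zero bytes so that every cell has exactly the same size.
    """
    if len(header) != HEADER_SIZE:
        raise ValueError(f"header must be exactly {HEADER_SIZE} bytes")

    cells: list[bytes] = []
    for offset in range(0, len(data), PAYLOAD_SIZE):
        chunk = data[offset:offset + PAYLOAD_SIZE]
        chunk = chunk.ljust(PAYLOAD_SIZE, b"\x00")  # pad the last chunk
        cells.append(header + chunk)
    return cells


if __name__ == "__main__":
    cells = segment_into_cells(b"x" * 100)
    print(len(cells), [len(c) for c in cells])
```

Because every cell has the same length, the number of cells needed for a message follows directly from its size, which is what makes the traffic easy to quantify.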

Modern network technologies

Plan

What is a local network?

Computer network hardware. Local area network topologies

Physical topologies of local area networks

Logical topologies of local area networks

Connectors and sockets

Coaxial cable

Twisted pair

Transmitting information via fiber optic cables

Communication equipment

Wireless network equipment and technologies

Technologies and protocols of local area networks

Addressing computers on the network and basic network protocols

Network facilities of MS Windows operating systems

Network Resource Management Concepts

Capabilities of the MS Windows family of operating systems for organizing work in a local network

Configuring network component settings

Configuring connection settings

Connecting a network printer

Connecting a network drive

What is a local network?

The problem of transferring information from one computer to another has existed since the advent of computers. Various approaches have been used to solve it. Until recently, the most common was the “courier” approach: copy the information onto removable media (floppy disks, CDs, etc.), carry it to the destination, and copy it again from the removable media to the recipient’s computer. Such methods of moving information are now giving way to network technologies. That is, computers are connected to each other in some way, and the user can transfer information to its destination without leaving the desk.

A set of computer devices that can communicate with each other is usually called a computer network. Two types of computer networks are usually distinguished: local (LAN, Local Area Network) and global (WAN, Wide Area Network). Some classifications add further types, such as metropolitan and regional networks, but in essence these are mostly variants of global networks of different scales. The most common approach is to classify networks as local or global based on geography. In this case, a local computer network is understood as a finite number of computers located in a limited area (within one building or neighboring buildings), connected by information channels that offer high speed and reliability of data transmission, and designed to solve a set of interrelated problems.

Computer network hardware. Local area network topologies

All computers of subscribers (users) working within the local area network must be able to interact with each other, i.e. be connected with each other. The way such connections are organized significantly affects the characteristics of the local computer network and is called its topology (architecture, configuration). There are physical and logical topologies. The physical topology of a local area network refers to the physical placement of the computers that are part of the network and the way they are connected to each other by conductors. The logical topology determines the way information flows and very often does not coincide with the selected physical topology for connecting local area network subscribers.

Physical topologies of local area networks

There are four main physical topologies used in building local area networks.